Welcome!

Hi! I am Elena, a Ph.D. student in Data Science at the University of Rome Tor Vergata, and I am part of the Human Centric ART group.

My research revolves around Trustworthy AI principles applied to language models. I have been working especially on robustness, security, interpretability, and fairness. Recently, I have been focusing on studying and mitigating privacy risks in LLMs.

Latest and Highlights

ACL 2025 has been a blast! An exciting conference, interesting papers, and a great chance to exchange ideas in a vibrant environment.

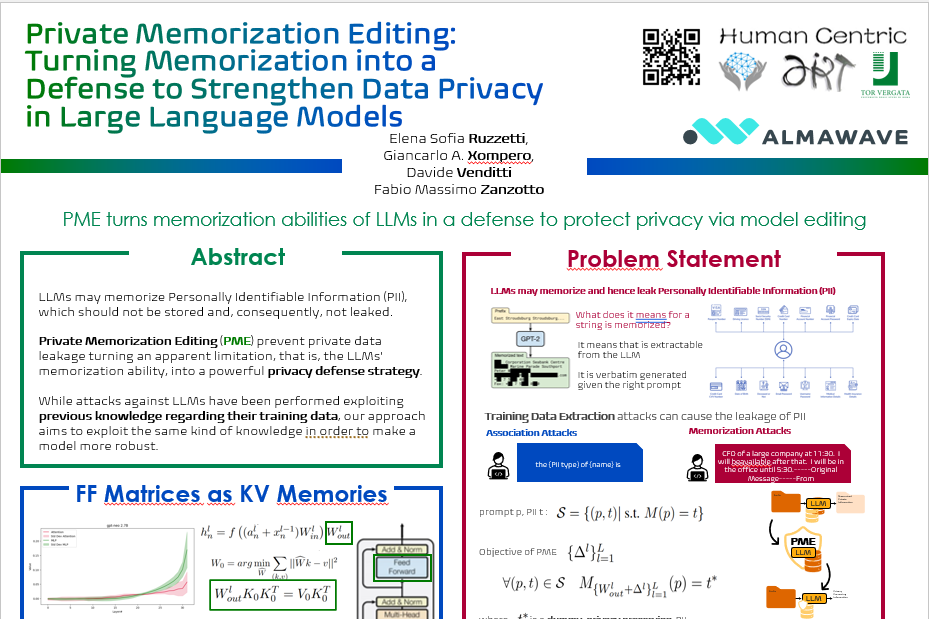

I am proud to say that our model editing technique, Private Memorization Editing (PME), captured the attention of those interested in efficient and precise editing of unwanted behaviours in LLMs! In the paper, we propose a model editing method to tackle privacy issues, guided by precise knowledge of the training data: the privacy of data owners is preserved without impacting model utility.

News

🚀 June 30, 2025: Fabio Massimo Zanzotto and I are giving a tutorial at IJCNN 2025! We will discuss memorization in Transformer-based Large Language Models and more.

🎉 May 2025: 2 papers accepted at ACL 2025! Check out the papers here:

- Private Memorization Editing: Turning Memorization into a Defense to Strengthen Data Privacy in Large Language Models (Ruzzetti et al., ACL 2025)

- Position Paper: MeMo: Towards Language Models with Associative Memory Mechanisms (Zanzotto et al., Findings 2025)

Reach out in Vienna to have a chat! 😄 Excited to see you there!